Handling long-running operations with Azure Durable Entities

Long-running operations. Everyone, at some point in their career, has to face a time-consuming task. And on many occasions you also need to know what the status is and what’s going on right now. Did it fail? Did it complete successfully?

Today we’re going to see a simple way to execute a long-running operation and keep track of the status using Azure Durable Entities.

I feel I’m on some kind of “writing spree” these days. I have 3 other series still to complete (Blazor Gamedev, Event Sourcing on Azure, and Azure Function Testing), but still, I feel the urge to write about a different topic. It feels like I’m afraid of losing that thought if I don’t set it “in stone” here on this blog. I’m sure many of you can relate.

And the same happens when I’m working. Although, funnily enough, I spend quite some time wandering through the house, doing apparently nothing. It’s quite hard to explain to my wife that in those moments I’m trying to get rid of the writer’s block and find the right solution for a problem I’m facing.

Coding, as usual, is always the last thing, the least important. It’s the byproduct of an ardent, fervent process of creation and design.

Anyways, let’s get back on track! Long-running operations. By definition, they take time. A lot. This of course means that we can’t execute them during an HTTP request, or directly from a UI input. The system will time out, and so will the user’s patience.

What we can do instead is offload that computation to the background. But still, we need a way to communicate the status to the caller (or any other interested party). For that we have basically two options:

- polling. By exposing a GET endpoint, everyone can poke the system and get the status. Nice, but not very efficient: lots of time and unnecessary HTTP requests wasted. It’s like when you’re working on something very complex and someone is constantly asking you “are we done?”. You’ll also lose time answering.

- pub/sub. Paradigm shift: clients won’t ping the system anymore, but will get informed _directly_ by it when the work is done.

“Are we done?” “We’re done when I tell you we’re done”.

Nothing prevents us from implementing both options; it’s just a matter of taste.
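To make the polling option a bit more concrete, here's a minimal client-side sketch. It assumes a status endpoint that answers 202 while the work is in progress and anything else once it's over. The `StatusPoller` class, the fixed delay and the cap on attempts are illustrative choices, not part of the sample.

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Threading;
using System.Threading.Tasks;

public static class StatusPoller
{
    // Polls statusUrl until the server stops answering 202 Accepted,
    // or until maxAttempts is reached. Returns the last response received.
    public static async Task<HttpResponseMessage> WaitForCompletionAsync(
        HttpClient http,
        Uri statusUrl,
        TimeSpan delay,
        int maxAttempts = 30,
        CancellationToken cancellationToken = default)
    {
        if (maxAttempts < 1)
            throw new ArgumentOutOfRangeException(nameof(maxAttempts));

        HttpResponseMessage response = null;
        for (var attempt = 1; attempt <= maxAttempts; attempt++)
        {
            // release the previous (still 202) response before asking again
            response?.Dispose();
            response = await http.GetAsync(statusUrl, cancellationToken);

            // anything other than 202 means the operation is no longer in progress
            if (response.StatusCode != HttpStatusCode.Accepted)
                return response;

            if (attempt < maxAttempts)
                await Task.Delay(delay, cancellationToken);
        }

        // still 202 after maxAttempts: the caller decides what to do next
        return response;
    }
}
```

Every iteration costs one HTTP round trip, which is exactly the waste mentioned above. A longer (or growing) delay reduces it, at the price of finding out later that the work is done.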

For the actual execution instead, we can make use of Azure Durable Entities. They’ll do the job for us, and at the same time keep track of what’s going on. We talked about Durable Entities already in another post, so I’m not going to introduce them again.

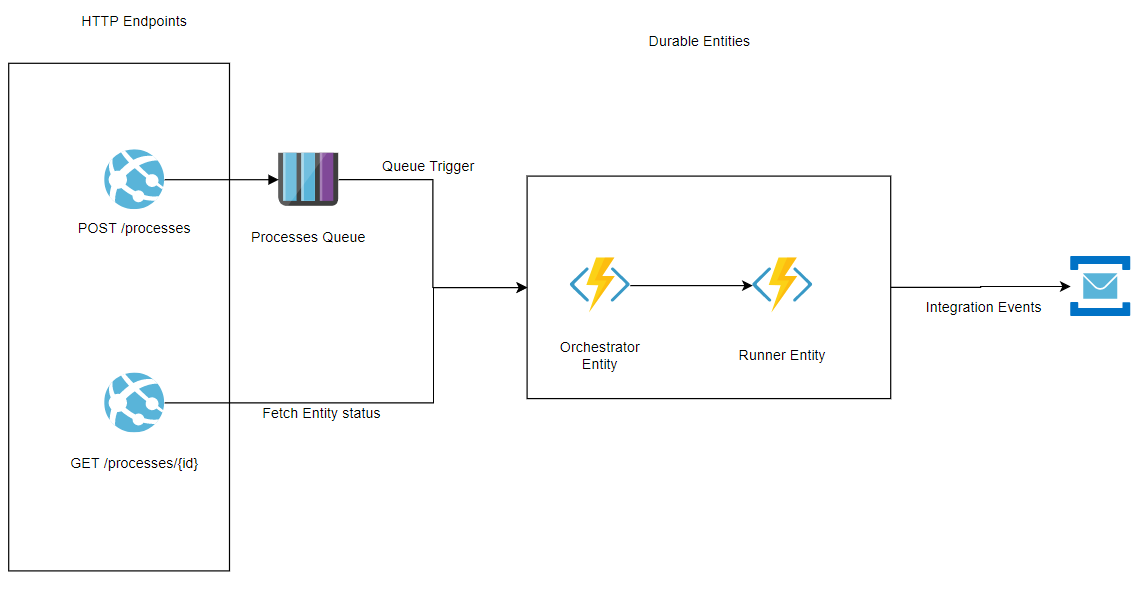

Let’s start from left to right. We have 2 HTTP endpoints: POST-ing to /processes will put a message on a queue and return a 202 straight away. It will also generate an id and set the Location header pointing to the second endpoint.

    [FunctionName("RequestProcess")]
    public static async Task<IActionResult> RequestProcess(
        [HttpTrigger(AuthorizationLevel.Anonymous, "post", Route = "/processes")] HttpRequest req,
        [Queue(QueueName, Connection = QueueConnectionName)] CloudQueue encryptionRequestsQueue)
    {
        // generate the id of the new operation and wrap it in a command
        var command = new StartOperation(Guid.NewGuid());
        var jsonMessage = System.Text.Json.JsonSerializer.Serialize(command);
        await encryptionRequestsQueue.AddMessageAsync(new CloudQueueMessage(jsonMessage));

        // 202 Accepted, with the Location header pointing to the status endpoint
        return new AcceptedObjectResult($"processes/{command.RequestId}", command);
    }

The GET endpoint will return a 200 once the operation has completed, or a 202 if the system is still processing it. We might also decide to return a different status in case of error; it’s up to you.
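The GET endpoint isn't shown in this post, so here's a rough sketch of how it could look. It reads the state of the Orchestrator Entity through `IDurableEntityClient.ReadEntityStateAsync`. `ProcessStateDto`, the `GetProcess` function name and the route are assumptions made for illustration; `ProcessStatus` and `LongRunningProcessOrchestrator` are the types used in the rest of the post.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.AspNetCore.Http;
using Microsoft.AspNetCore.Mvc;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.DurableTask;
using Microsoft.Azure.WebJobs.Extensions.Http;

// Hypothetical read model: assumes the entity state exposes Id and Status.
public class ProcessStateDto
{
    public Guid Id { get; set; }
    public ProcessStatus Status { get; set; }
}

public static class GetProcessFunction
{
    [FunctionName("GetProcess")]
    public static async Task<IActionResult> GetProcess(
        [HttpTrigger(AuthorizationLevel.Anonymous, "get", Route = "processes/{id}")] HttpRequest req,
        string id,
        [DurableClient] IDurableEntityClient client)
    {
        // normalize the id so it matches the key used when the entity was signaled
        if (!Guid.TryParse(id, out var requestId))
            return new BadRequestResult();

        var entityId = new EntityId(nameof(LongRunningProcessOrchestrator), requestId.ToString());
        var response = await client.ReadEntityStateAsync<ProcessStateDto>(entityId);

        if (!response.EntityExists)
            return new NotFoundResult();

        var state = response.EntityState;
        if (state.Status == ProcessStatus.Completed)
            return new OkObjectResult(state);

        // still running: 202 again, pointing back to this same endpoint
        return new AcceptedResult($"processes/{requestId}", state);
    }
}
```

One caveat with this sketch: the entity only exists after the queue message has been picked up, so a client following the Location header immediately might briefly get a 404. Whether to treat that case as "pending" instead is a design decision.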

Now the juicy part: the message on the queue will inform the system that it’s time to roll up the sleeves and do some real work:

    [FunctionName("RunProcess")]
    public static async Task RunProcess(
        [QueueTrigger(QueueName, Connection = QueueConnectionName)] string message,
        [DurableClient] IDurableEntityClient client)
    {
        var command = Newtonsoft.Json.JsonConvert.DeserializeObject<StartOperation>(message);
        var entityId = new EntityId(nameof(LongRunningProcessOrchestrator), command.RequestId.ToString());
        await client.SignalEntityAsync<ILongRunningProcessOrchestrator>(entityId, e => e.Start(command));
    }

The code is pretty straightforward: it deserializes the command from the message and spins up an Orchestrator Entity. This one will serve two purposes: spinning up a Runner Entity and keeping track of the state.

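We can’t run the process directly in the orchestrator, otherwise the system won’t be able to properly store the current state: an entity’s state is persisted only after the current operation returns, so nobody would see the Started status until the whole job was over.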

Better offload (again, yes) the work to someone else:

    public void Start(StartOperation command)
    {
        this.Id = command.RequestId;
        this.Status = ProcessStatus.Started;

        // hand the actual work over to the Runner Entity, using the same request id as key
        var runnerId = new EntityId(nameof(LongRunningProcessRunner), command.RequestId.ToString());
        _context.SignalEntity<ILongRunningProcessRunner>(runnerId, r => r.RunAsync(command));
    }

Moreover, this way we can have different types of Runner Entities and trigger one or another based on the input command.
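The snippets in this post only show individual methods, so here's a sketch of how the Orchestrator Entity as a whole could be wired up. Take it as an assumption rather than the actual sample code: the shapes of `StartOperation`, `ProcessStatus` and the two interfaces are inferred from how they're used here, and passing the context to the constructor through `DispatchAsync` is just one way of getting hold of `_context`.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.DurableTask;
using Newtonsoft.Json;

// Assumed shapes: the post only shows how these types are used.
public enum ProcessStatus { Started, Completed }

public class StartOperation
{
    public StartOperation(Guid requestId) => RequestId = requestId;
    public Guid RequestId { get; }
}

// Entity interfaces can only declare methods, each with at most one argument,
// returning void, Task or Task<T>.
public interface ILongRunningProcessOrchestrator
{
    void Start(StartOperation command);
    void OnCompleted();
}

public interface ILongRunningProcessRunner
{
    Task RunAsync(StartOperation command);
}

// Opt-in serialization: only the annotated properties end up in the persisted state.
[JsonObject(MemberSerialization.OptIn)]
public class LongRunningProcessOrchestrator : ILongRunningProcessOrchestrator
{
    private readonly IDurableEntityContext _context;

    public LongRunningProcessOrchestrator(IDurableEntityContext context) => _context = context;

    [JsonProperty("id")]
    public Guid Id { get; set; }

    [JsonProperty("status")]
    public ProcessStatus Status { get; set; }

    public void Start(StartOperation command)
    {
        // same body as the Start method shown above
    }

    public void OnCompleted() => Status = ProcessStatus.Completed;

    // Entry point: the runtime invokes this function for every operation sent to the entity.
    // The context is forwarded as a constructor parameter so the instance can signal other entities.
    [FunctionName(nameof(LongRunningProcessOrchestrator))]
    public static Task Run([EntityTrigger] IDurableEntityContext ctx)
        => ctx.DispatchAsync<LongRunningProcessOrchestrator>(ctx);
}
```

The Runner Entity would follow the same pattern, with its own `[FunctionName]` entry point and a class implementing `ILongRunningProcessRunner`.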

Once triggered, the Runner will do whatever it’s meant to do and then call back the Orchestrator. It might also pass some details about the result of the operation, if needed:

    public async Task RunAsync(StartOperation command)
    {
        // do something very very time-consuming

        // then report back to the Orchestrator that created this Runner
        var orchestratorId = new EntityId(nameof(LongRunningProcessOrchestrator), command.RequestId.ToString());
        _context.SignalEntity<ILongRunningProcessOrchestrator>(orchestratorId, r => r.OnCompleted());
    }

And here’s the final part: in the OnCompleted() method, the Orchestrator will update its state and finally go to rest:

    public void OnCompleted()
    {
        Status = ProcessStatus.Completed;
    }

This is also the place where you might want to send the integration event to the subscribers, informing them the work is completed (maybe).

The code for a working sample is available on GitHub as usual. Let me know what you think. À la prochaine!
